Smartphones increasingly shape how we remember and visualize moments. While the results can be impressive, they can also alter our perception of reality, the BBC reports.

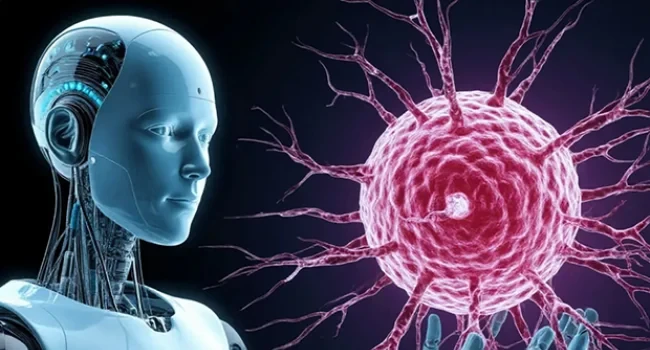

If you have ever tried to photograph the Moon with your phone, you were probably disappointed with the result, unless the phone was a Samsung Galaxy. These models offer a "100x zoom" that produces stunning shots of the Moon, with one caveat: Samsung's Moon images may actually be faked.

One Reddit user demonstrated this by pointing a Samsung phone at a blurry image of the Moon on a computer screen. The phone readily produced a clear shot, full of craters and shadows that were not present in the original image. Samsung calls this a "detail enhancement feature," but in essence the company has trained AI to recognize the Moon and add details the camera cannot capture.

Not every device has such a feature, but every press of the camera button triggers complex AI-driven algorithms and data processing. These systems can perform trillions of operations before the photograph is saved to the gallery.

All these technologies are designed to produce beautiful and, in most cases, accurate images. In extreme cases, however, some smartphones apply AI-based enhancements that make the result differ significantly from reality. The next time you take a photo, consider: is your camera capturing reality or trying to change it?

“This is called computational photography,” explains Ziv Attar, CEO of Glass Imaging, who worked on the portrait mode for the iPhone. “Your phone does much more than just gather light hitting the camera sensors. It tries to guess what the image would look like if the camera were more advanced, and then recreates it for you,” he adds.

A Samsung representative claims that “AI-based features are designed to enhance image quality while maintaining its authenticity.” Users can disable these features according to their preferences.

However, even with the AI editing features turned off, algorithms continue to process your photos.

What happens when you take a photo?

“When you press the 'Capture' button, your phone takes not one but several images, from four to ten in normal lighting,” says Attar. The device merges these frames into a shot that is better than any single frame. Some of the frames may be near-duplicates, while others focus on different parts of the scene.
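To make the idea of merging several frames concrete, here is a minimal sketch of the simplest possible form of it: averaging repeated noisy captures of the same scene. It is an illustration only, not any manufacturer's pipeline. The functions `capture_frame`, `stack_frames`, and `rms_error` are hypothetical names, the scene is a plain list of brightness values, and the sketch assumes the frames are already perfectly aligned, which real phones have to work hard to achieve.

```python
import random
import statistics

def capture_frame(scene, noise_sigma, rng):
    """Simulate one noisy exposure of a scene (a list of pixel brightness values)."""
    return [pixel + rng.gauss(0.0, noise_sigma) for pixel in scene]

def stack_frames(frames):
    """Merge frames by averaging each pixel position across all frames."""
    return [statistics.fmean(values) for values in zip(*frames)]

def rms_error(image, reference):
    """Root-mean-square difference between an image and the true scene."""
    squared = sum((a - b) ** 2 for a, b in zip(image, reference))
    return (squared / len(reference)) ** 0.5

# Assumed toy setup: a 1000-pixel scene, sensor noise with sigma = 10, 8 frames.
rng = random.Random(42)
scene = [float(i % 256) for i in range(1000)]
frames = [capture_frame(scene, 10.0, rng) for _ in range(8)]

single_error = rms_error(frames[0], scene)
stacked_error = rms_error(stack_frames(frames), scene)
print(f"single frame error: {single_error:.2f}")
print(f"stacked frame error: {stacked_error:.2f}")
```

Because the random noise in each frame is independent, averaging tends to cancel it out, so the stacked result lands closer to the true scene than any one frame does. That is one reason a burst of frames can beat a single exposure from the same small sensor.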

This processing corrects flaws that the average viewer should never see. For example, noise reduction smooths out grainy textures, while color correction makes the image look more natural. High Dynamic Range (HDR) is also used: it combines shots taken at different exposures to preserve detail in both shadows and bright areas. Smartphones also employ various methods to reduce blur.

For instance, the iPhone uses Deep Fusion, an AI-based system that applies many of these methods and can also recognize objects in the image and process them differently. “This is very high-level segmentation,” explains Attar.
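The HDR step can be sketched in a few lines. The following is a simplified illustration under stated assumptions, not how any particular phone implements it: the sensor is modeled as scaling scene brightness by an exposure factor and clipping at 1.0, and each pixel is weighted by how close it is to mid-gray. The names `simulate_exposure`, `well_exposed_weight`, and `merge_hdr` are hypothetical.

```python
def simulate_exposure(radiance, exposure):
    """Toy sensor model: scale scene radiance by the exposure and clip at 1.0."""
    return [min(1.0, r * exposure) for r in radiance]

def well_exposed_weight(value):
    """Trust mid-gray pixels most; nearly ignore pure black or pure white ones."""
    return max(1e-6, 1.0 - abs(value - 0.5) * 2.0)

def merge_hdr(exposures):
    """Estimate scene radiance from a list of (pixels, exposure) pairs.

    Each pixel value is divided by its exposure to undo the scaling, then the
    estimates are combined as a weighted average across the shots.
    """
    merged = []
    for i in range(len(exposures[0][0])):
        total = 0.0
        weight_sum = 0.0
        for pixels, exposure in exposures:
            weight = well_exposed_weight(pixels[i])
            total += weight * pixels[i] / exposure
            weight_sum += weight
        merged.append(total / weight_sum)
    return merged

# Assumed scene: deep shadow, midtone, and two highlights too bright for a long exposure.
radiance = [0.02, 0.3, 1.5, 3.0]
short_pixels = simulate_exposure(radiance, 0.25)  # keeps highlights, shadows are very dark
long_pixels = simulate_exposure(radiance, 2.0)    # lifts shadows, clips the highlights
long_only = [value / 2.0 for value in long_pixels]
merged = merge_hdr([(short_pixels, 0.25), (long_pixels, 2.0)])

print("long exposure only:", [round(v, 3) for v in long_only])
print("merged estimate:   ", [round(v, 3) for v in merged])
```

In this toy setup the long exposure on its own clips the two brightest pixels, so their true brightness is lost, while the merged estimate recovers values close to the original scene because the short exposure still holds that information. Real pipelines must also cope with noise, motion between frames, and tone mapping for display, none of which is modeled here.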

The result is sharp photos in good conditions. However, some critics and discerning photographers believe that modern smartphones go too far, creating images with an unnatural texture reminiscent of watercolor paintings. Fixing flaws sometimes introduces strange distortions that look like hallucinations when the image is enlarged. Some users even go back to older phone models or keep a second phone solely for photography.

An Apple representative emphasizes: “We aim to help users capture real moments so they can relive their memories. While we see potential in artificial intelligence, we also value the traditions of photography and believe it should be handled with care. Our goal is to provide users with devices that take authentic and stunning photos, as well as tools for personalization.”

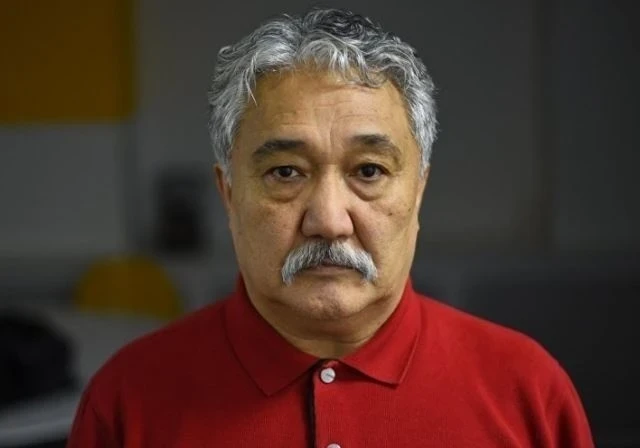

On the positive side, much of this editing could always be done manually, given enough experience and patience. Now, “instead of dealing with numerous settings, automation does it for you,” notes Lev Manovich, a professor of digital culture at the City University of New York. “Some features that were once available only to professionals are now accessible to amateurs.”

Nevertheless, your phone often makes creative decisions about how moments are captured, and users may not be aware of this. On some devices, AI does much more than just adjust settings.

“Smartphone manufacturers really want the shots to reflect what people see. They are not trying to create fake images,” notes Rafal Mantiuk, a professor of graphics at the University of Cambridge. “However, there is a lot of creative freedom in the image processing. Each phone has its own style, just like different photographers.”

“This is pure hallucination”

These discussions rest on an implicit standard: the notion that a “real” photograph should look like a shot taken on film. That comparison is probably not entirely fair. Every camera has involved some image processing from the very beginning. The term “AI” may evoke negative associations, but in many cases the algorithms simply correct flaws inherent in the small lenses and sensors of smartphones.

However, some features may go beyond correcting flaws and beyond what the camera could possibly have captured.