According to one study, a brief interaction with a trained chatbot was nearly four times as persuasive as traditional political advertising on television.

Authors of the article: Steven Lee Myers and Teddy Rosenbluth

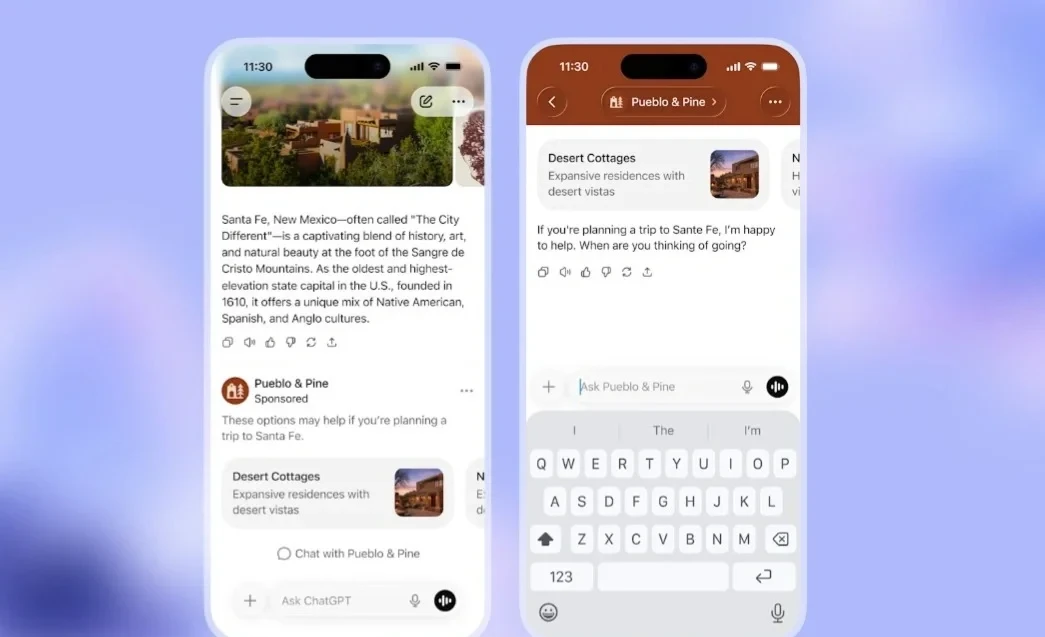

Chatbots can assist in trip planning, help with fact-checking, and provide advice. But can they also influence users' political views?

Two recent studies, published in the journals Nature and Science, show that even a brief conversation with an AI chatbot can change people's opinions about political candidates or issues. One of the studies found that interacting with such a bot was nearly four times as persuasive as the television advertisements aired during the most recent U.S. presidential election.

These findings underscore that AI could become an important tool for candidates and political strategists seeking to sway voters, even those who have already made their preferences clear. This is particularly relevant ahead of the crucial midterm elections in the U.S. next year.

“This will be the cutting edge of innovation in political campaigns,” said David G. Rand, a professor of information science and marketing at Cornell University, who took part in both studies.

The experiments used various commercial chatbots, such as ChatGPT from OpenAI, Llama from Meta, and Gemini from Google. Researchers tasked the bots with convincing participants to support a specific candidate or a position on a political issue.

As chatbots grow more popular, scientists are voicing concern that AI could be used to manipulate political views. While many chatbots strive to remain politically neutral, some, like the Grok bot on X, clearly reflect the opinions of their creators.

The authors of the article in Science warn that as the technology advances, AI could provide “influential players with a significant advantage in persuasion.” The models used in the studies also often aim to please the user, which sometimes leads them to make false claims and unfounded arguments.

Using AI-assisted fact-checking, the researchers found that claims made by bots arguing for right-wing politicians were significantly less accurate than claims made by bots arguing for left-wing politicians.

The Science study, conducted by researchers from the UK and the US, covered nearly 77,000 voters in the UK and more than 700 political issues, including tax policy, gender relations, and attitudes towards Russian President Vladimir Putin.

In the Nature study, which included respondents from the U.S., Canada, and Poland, chatbots tried to convince people to support one of the two main candidates in those countries' national elections in 2024 and 2025.

About one in ten voters in Canada and Poland said that the conversations with bots had influenced their opinion of the candidate they voted for. In the U.S., where the race tilted narrowly towards Donald Trump, the figure was one in 25.

In one conversation about trust in candidates, a chatbot talking with a Trump supporter cited Kamala Harris's achievements in California, including the establishment of the Bureau of Juvenile Affairs and the promotion of the Consumer Protection Act. It also noted that Trump's company, the Trump Organization, had been convicted of tax fraud and fined $1.6 million.

By the end of the conversation, the Trump supporter appeared to have been swayed, writing: “If I had doubts about Harris, now I really believe in her and may vote for her.”

The chatbot assigned to argue for Trump also proved persuasive.

“Trump's commitment to his campaign promises, such as tax cuts and deregulating the economy, was evident,” it told a voter who was leaning towards Harris. “His actions, regardless of their outcomes, demonstrate a certain degree of reliability.”

“I should have been less biased against Trump,” the voter admitted.

For political strategists, the challenge will be using trained chatbots to reach skeptical voters, especially amid deep partisan divides.

“In real-world conditions, it will be extremely difficult to convince people even to start a conversation with such chatbots,” said Ethan Porter, a researcher at George Washington University who was not involved in the research.

Researchers suggest that the persuasiveness of chatbots may be related to the large amount of evidence they present, even if it is not always accurate. This hypothesis was tested during experiments where bots were instructed not to use facts in their arguments, which significantly reduced their persuasiveness (in one test, it decreased by almost half).

The results build on earlier research by Rand showing that chatbots can help people break out of the vicious cycle of conspiracy theories, and they challenge the common belief that a person's political position is immune to new information.

“There is a belief that people ignore facts they don’t like,” Rand said. “Our work shows that this is not as true as many tend to think.”

Original: The New York Times