Tech billionaires are showing growing interest in building or buying secure shelters and remote residences that can be seen as "apocalyptic insurance." Mark Zuckerberg, Sam Altman, and Reid Hoffman stand out among them. This raises the question of whether they know something the public does not, such as looming disasters related to artificial intelligence, climate change, or war. However, as reported by the BBC, these fears are often exaggerated and lack a factual basis.

Experts argue that talk of creating artificial general intelligence (AGI), meaning AI that matches or surpasses human intelligence, is closer to marketing than to reality. Modern models can process data and mimic reasoning, but they lack understanding and emotions and merely predict words and patterns. Professor Wendy Hall of the University of Southampton emphasizes that significant breakthroughs are needed to achieve "truly human" intelligence, and that current advances, while impressive, remain far from human level.

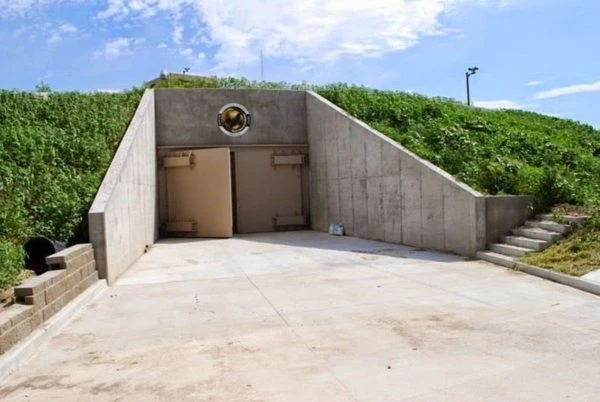

Despite this, the ultra-wealthy continue to invest in protective structures and bunkers. For example, Mark Zuckerberg is reportedly building a large complex in Hawaii that includes a substantial underground shelter, although he denies using the term "doomsday bunker." Reid Hoffman, co-founder of LinkedIn, openly acknowledges having "apocalyptic insurance" through his properties, particularly in New Zealand. It is therefore not surprising that the public sees such purchases as the elite preparing for societal collapse, climate crises, or threats from AI.

Fears in Silicon Valley are also fueled by statements from AI industry representatives. OpenAI co-founder Ilya Sutskever reportedly claimed that "we will definitely build a bunker before launching AGI." Some industry leaders, including Sam Altman, Demis Hassabis, and Dario Amodei, entertain the possibility of AGI emerging within the next ten years. However, skeptics consider this premature and point out that such statements distract from more pressing issues.

Many scientists believe that discussions about "superintelligence" distract from real challenges. Far more important are the systemic risks of existing models, such as bias, the spread of misinformation, threats to jobs, and the concentration of power among major platforms. Professor Neil Lawrence emphasizes that the term AGI is misleading, reminding us that intelligence always depends on context. He suggests imagining a universal "artificial vehicle" that can simultaneously fly, swim, and drive, which is an obviously unachievable ideal. In his view, instead of dreaming of an all-encompassing intelligence, we should focus on how current AI systems affect the economy, politics, and everyday life.

Governments, meanwhile, are taking differing approaches. In the U.S., the Biden administration initially supported mandatory safety testing for AI, but the policy later changed course, and some requirements were deemed obstacles to innovation. In the UK, the AI Safety Institute was established to research risks and publish assessments. Political debate continues, but the scientific community's views are voiced less loudly than the industry's claims.

The BBC notes that "apocalyptic preparedness" among the wealthy is likely driven by a mix of motives, including genuine fear of potential threats and an element of status display, as the super-rich show that they can afford such caution. However ambitious these projects appear, the scientific community does not anticipate the imminent arrival of AGI or "superintelligence." It is far more reasonable to pay attention to how algorithms already in use are transforming society, whom they benefit, and whom they put at risk than to prepare for the apocalypse with suitcases in a bunker.