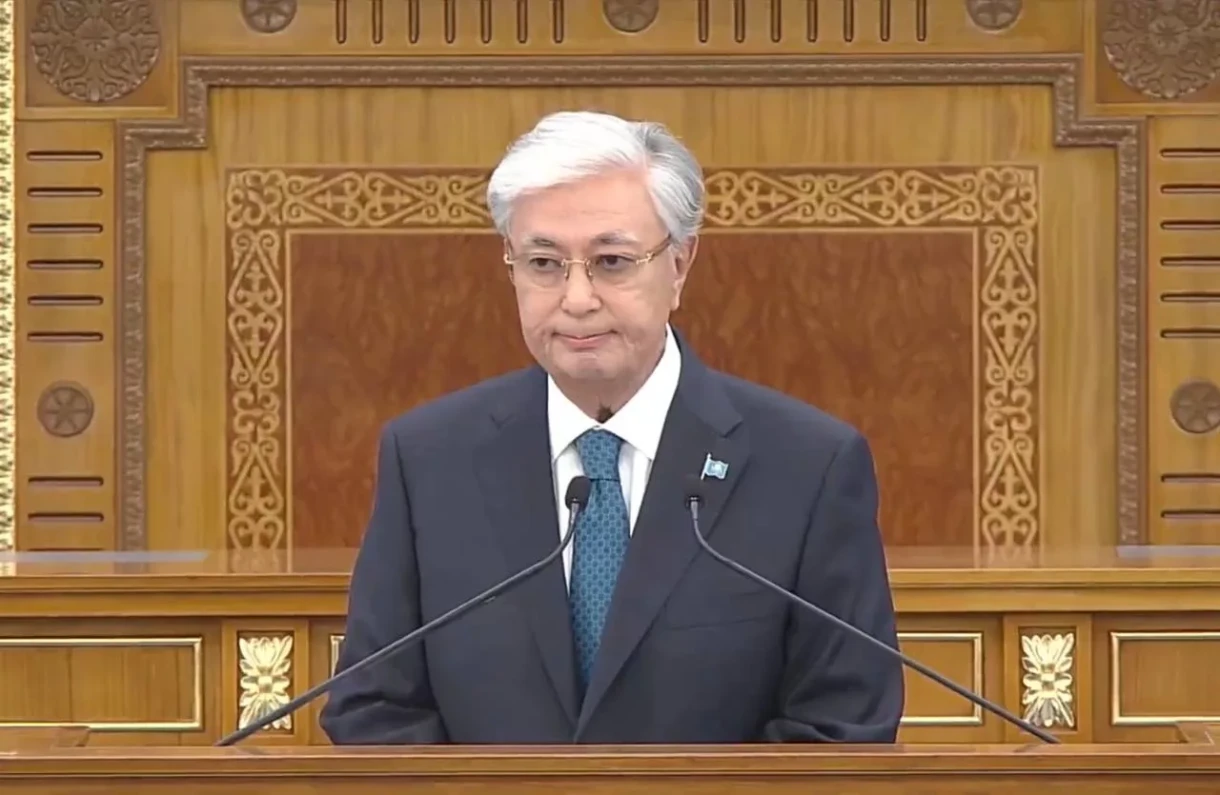

South Korea's "Fundamental Law on the Development of Artificial Intelligence and the Formation of a Basis for Trust" was adopted to create a legal framework for combating misinformation and other risks arising from the development of AI. Kazinform reported this, citing Yonhap.

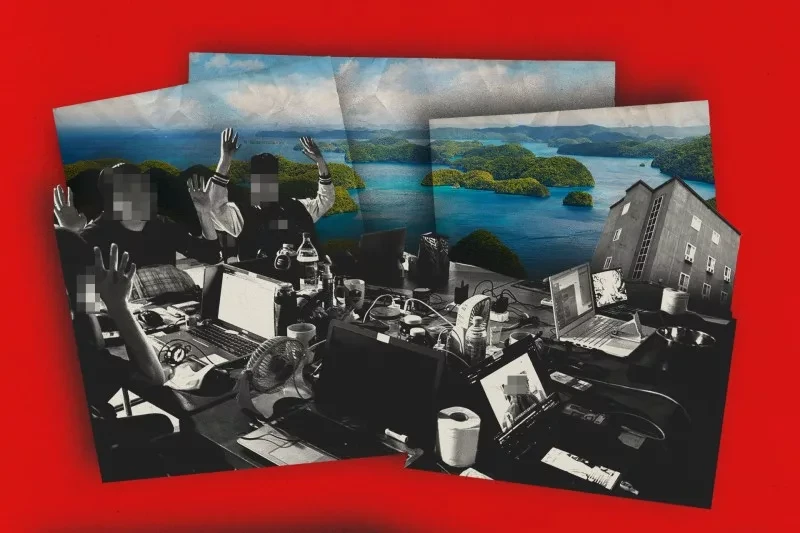

South Korea's Ministry of Science said the government has approved new comprehensive guidelines for the use of AI at the national level. The law's main goal is to strengthen the accountability of developers and companies for preventing misinformation and deepfakes generated with AI. It also empowers the government to conduct inspections and impose penalties for violations.

The law introduces the term "high-risk artificial intelligence," which covers AI models that can significantly affect citizens' daily lives and safety, such as those used in employment, credit assessments, and medical consultations.

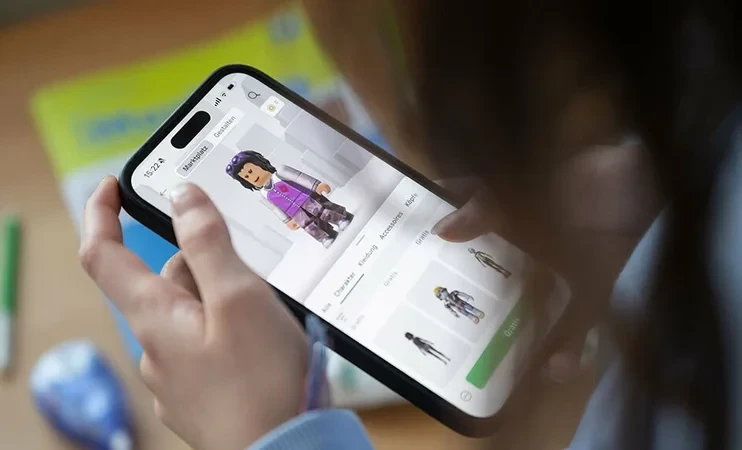

Organizations using high-risk AI technologies must inform users that their services are AI-based, and they are responsible for the safety of those services. AI-generated content must carry watermarks indicating its artificial origin.

“Labeling AI content is a necessary precaution to prevent negative consequences, such as the creation of deepfakes,” a ministry representative emphasized.

Global companies that provide AI services in South Korea must appoint a local representative if they meet at least one of the following criteria: annual revenue exceeding 1 trillion won (approximately $681 million), domestic sales of 10 billion won, or 1 million daily users. Companies currently meeting these requirements include OpenAI and Google.