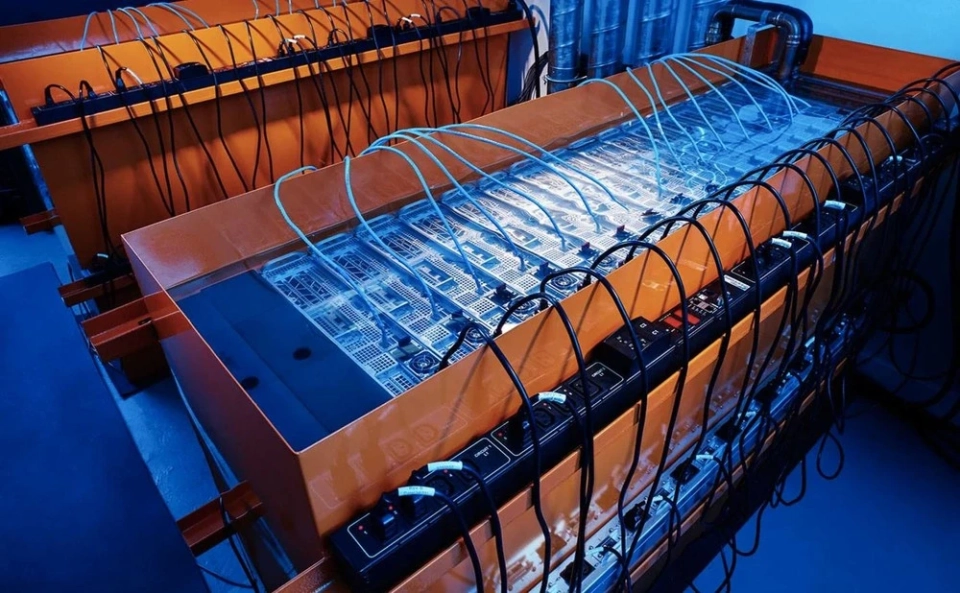

To illustrate the scale of the problem, UNICEF draws an analogy: in every classroom, there is at least one child who has become a victim of such crimes.

Deepfakes are media (photos, videos, audio) that are created or modified using artificial intelligence (AI) and look or sound real. Unfortunately, they are increasingly used to create sexualized content involving minors.

Researchers noted that children are well aware of the risks associated with AI. In some of the countries where the study was conducted, approximately two-thirds of children said they were worried that fake sexual images or videos of them could be created.

UNICEF emphasizes that creating sexualized deepfakes of children is a form of violence against children.

The organization also said it welcomes the efforts of AI developers who build safety into their products from the very beginning and put reliable measures in place to prevent misuse of their technologies.

However, many AI systems are developed without adequate safety mechanisms. The risks are exacerbated when generative AI tools are integrated into social networks, where such materials spread rapidly.

UNICEF urges AI developers to build in safety measures at the design stage and strongly recommends that all digital companies prevent the spread of AI-generated child sexual abuse material, rather than simply reacting to incidents after they have occurred.

The photo on the main page is illustrative: pngtree.com.